
Jan 2, 2026

The Ultimate Guide to AI Agents: Enterprise Edition (2026)

A practical guide to AI agents, explaining how they work, where they deliver value, and why enterprises are rapidly adopting them for autonomous, scalable workflows.

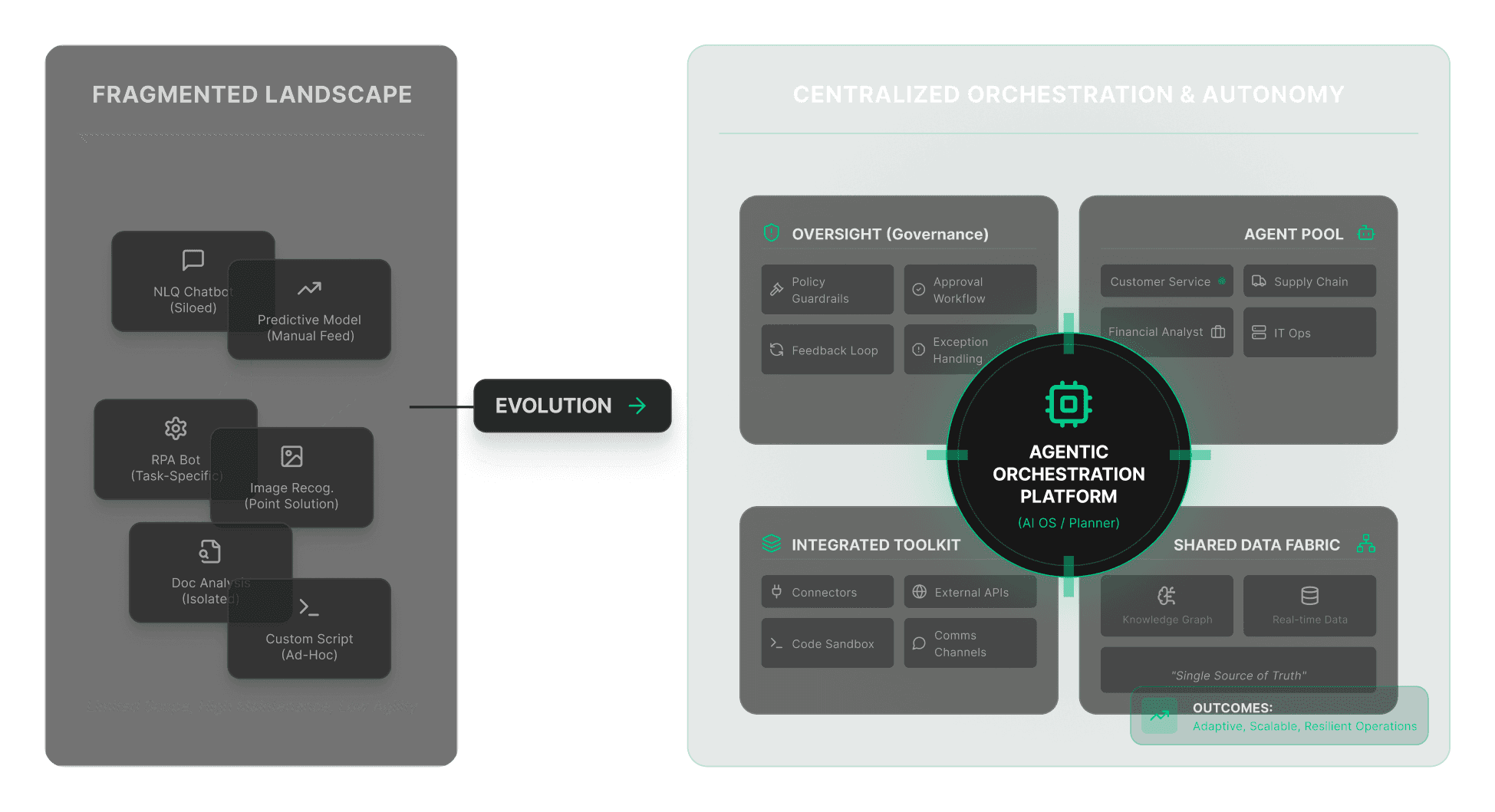

Despite billions in AI investment, a familiar paradox persists across enterprises: slow decisions, fragmented workflows, and rising coordination costs that resist automation. AI agents are increasingly presented as the answer, yet the term itself is a frequent source of confusion. For some, AI agents are autonomous systems that can plan, reason, and act. For others, the label has become a catch-all applied to everything from chatbots to workflow automation. The result is growing interest, and growing ambiguity.

This distinction matters: clarity here determines whether agents remain experimental tools or become durable production systems. When designed and deployed correctly, AI agents represent a meaningful shift in how work gets done inside enterprises. The shift does not come from a new model. It comes from changing how existing capabilities are orchestrated. In practice, this marks a move from isolated task execution toward goal-oriented autonomy.

This guide is written for executives, product leaders, and strategy teams who are already familiar with AI but want a clear, grounded understanding of what enterprise-grade AI agents actually are in 2026, how they work, and where they deliver real value. It offers a practical operating lens for leaders navigating the next phase of enterprise AI adoption.

What are AI Agents?

In practice, enterprises are discovering that AI agents are best understood not as a new class of models, but as a system design pattern for executing work. At its core, this pattern consists of three main components: Perception (context), Reasoning (planning), and Action (tool use).

Most AI systems in production today, including chat interfaces and retrieval-augmented generation (RAG) pipelines, are fundamentally reactive: they generate an output when prompted, then reset. AI agents, by contrast, function as continuous actors: they monitor signals, maintain state (memory), and determine when to act without constant human initiation.
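A minimal sketch can make the difference between reactive and continuous systems concrete. The `ContinuousAgent` class, the numeric signal, and the rolling-average trigger are hypothetical illustrations, not a reference design. The point is that the agent keeps state between observations and decides on its own when a signal warrants action.

```python
from dataclasses import dataclass, field


@dataclass
class ContinuousAgent:
    """Hypothetical agent that monitors a metric and acts only when needed."""
    threshold: float                               # act when the rolling average exceeds this
    window: int = 3                                # number of recent observations kept in state
    history: list = field(default_factory=list)    # state carried across observations
    actions: list = field(default_factory=list)    # actions the agent chose to take

    def observe(self, value: float) -> None:
        # Perception: record the new signal and keep only the recent window.
        self.history.append(value)
        self.history = self.history[-self.window:]
        # Reasoning: decide whether the situation warrants action.
        if len(self.history) == self.window:
            avg = sum(self.history) / self.window
            if avg > self.threshold:
                # Action: a real system would call an enterprise API here.
                self.actions.append(f"escalate: rolling average {avg:.1f} > {self.threshold}")
                self.history.clear()  # reset after acting to avoid repeated alerts


agent = ContinuousAgent(threshold=10.0)
for reading in [4, 8, 9, 12, 14, 15, 3]:
    agent.observe(reading)
print(agent.actions)  # ['escalate: rolling average 11.7 > 10.0']
```

No human prompt triggers the action. The agent's own state and rule decide when it acts.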

Two characteristics, in particular, distinguish enterprise-grade agents:

Actionability: Agents are connected to enterprise systems, allowing them to execute decisions rather than merely recommend them.

Persistence: Agents maintain state, enabling them to operate reliably across long-running, decision-heavy workflows.

Importantly, enterprise AI agents operate with bounded autonomy. Their actions are constrained by defined permissions, escalation paths, and governance controls. Autonomy here is a deliberate design choice, not an absence of oversight.

How AI Agents Work: The Enterprise Architecture View

Understanding AI agents at an architectural level is essential, because most enterprise failures stem not from model performance but from how systems are designed, integrated, and governed.

Enterprise-grade AI agents are composed of a small number of repeatable building blocks, coordinated through an orchestration layer.

Core Components of an Agentic System

Perception: Agents begin with context; they ingest structured and unstructured data (documents, databases, event streams, APIs, and external signals). Context is continuously refreshed, enabling operation in dynamic environments.

Reasoning: Agents are problem solvers that decompose goals into intermediate steps, prioritize actions, and apply decision logic to achieve defined objectives. The emphasis is not on perfect reasoning, but on sufficient reasoning to move work forward reliably and cost-effectively.

Tools & Actions: Agents are connected to tools (enterprise systems, workflows, and APIs) that let them move beyond generating advice: they take action by triggering processes, updating records, and executing tasks across live enterprise systems.

Memory: Enterprise agents maintain state over time. Short-term memory tracks task progress and intermediate outcomes, while long-term memory captures historical context, prior decisions, and recurring patterns. This persistence lets an agent resume and continue work across sessions instead of starting from scratch each time.

Feedback & Control: No enterprise agent operates unchecked. Effective systems include feedback loops such as human-in-the-loop (HITL) review, exception handling, and policy enforcement to ensure actions remain governed and auditable.
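The components above can be wired into a single work cycle. The sketch below is illustrative only. The `Agent` class, the `close_ticket` goal, and the `lookup`/`resolve` tools are hypothetical, and a fixed rule stands in for the LLM-based planner a real agent would use. The sketch also checks the whole plan before executing any step. A goal it cannot plan for therefore goes to human review instead of being partially executed.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical skeleton wiring the core components into one work cycle."""
    tools: dict[str, Callable[[str], str]]                 # Tools & Actions: name -> callable
    short_term: list[str] = field(default_factory=list)    # Memory: progress on the current task
    long_term: list[str] = field(default_factory=list)     # Memory: outcomes kept across tasks
    review_queue: list[str] = field(default_factory=list)  # Feedback & Control: goals for humans

    def perceive(self, event: dict) -> dict:
        # Perception: normalize an incoming event into context the agent can reason over.
        return {"goal": event["goal"], "record": event.get("record", "")}

    def plan(self, context: dict) -> list[tuple[str, str]]:
        # Reasoning: decompose the goal into (tool, argument) steps.
        # A fixed rule stands in here for an LLM-based planner.
        if context["goal"] == "close_ticket":
            return [("lookup", context["record"]), ("resolve", context["record"])]
        return []  # no known plan for this goal

    def run(self, event: dict) -> None:
        context = self.perceive(event)
        steps = self.plan(context)
        # Feedback & Control: check the whole plan before acting, so an unplannable
        # goal or an unknown tool is escalated instead of half-executed.
        if not steps or any(name not in self.tools for name, _ in steps):
            self.review_queue.append(context["goal"])
            return
        for name, arg in steps:
            self.short_term.append(self.tools[name](arg))  # Action
        # Persist a summary to long-term memory, then clear task-level state.
        self.long_term.append(f"{context['goal']}: {len(self.short_term)} steps")
        self.short_term.clear()


tools = {
    "lookup": lambda record: f"looked up {record}",
    "resolve": lambda record: f"resolved {record}",
}
agent = Agent(tools=tools)
agent.run({"goal": "close_ticket", "record": "INC-42"})
agent.run({"goal": "renegotiate_contract"})
print(agent.long_term)     # ['close_ticket: 2 steps']
print(agent.review_queue)  # ['renegotiate_contract']
```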

The Orchestration Layer: Where Enterprise Value is Won or Lost

While individual components matter, orchestration is the defining layer of enterprise agent systems. It governs how context is gathered, how decisions are sequenced, how tools are invoked, and how failures are handled. It coordinates actions not only between agents and tools, but also across multiple agents working on related objectives.

This is why two systems using the same underlying model can perform very differently depending on orchestration quality, state management, and governance enforcement. In practice, orchestration determines whether an agent behaves as a reliable system or an unpredictable experiment.
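Here is one way to illustrate what orchestration quality means in practice. The minimal sketch below shows an orchestrator that sequences steps and retries errors it treats as transient. On any other error it halts the workflow, so a bad intermediate result never reaches downstream systems. The `Orchestrator` class, the choice of `TimeoutError` and `ConnectionError` as "transient", and the invoice workflow are assumptions for illustration.

```python
from typing import Callable

# Assumption: these built-in error types are treated as transient and worth retrying.
TRANSIENT_ERRORS = (TimeoutError, ConnectionError)


class StepFailed(Exception):
    """Raised when the orchestrator halts a workflow and escalates."""


class Orchestrator:
    def __init__(self, max_retries: int = 2):
        self.max_retries = max_retries
        self.log: list[str] = []

    def run(self, steps: list[tuple[str, Callable[[dict], dict]]], state: dict) -> dict:
        for name, step in steps:
            for attempt in range(1, self.max_retries + 2):  # first try plus retries
                try:
                    state = step(state)
                    self.log.append(f"{name}: ok")
                    break
                except TRANSIENT_ERRORS as exc:
                    self.log.append(f"{name}: attempt {attempt} failed ({exc})")
                except Exception as exc:
                    # Non-transient error: stop now so later steps never run on bad state.
                    self.log.append(f"{name}: failed ({exc})")
                    raise StepFailed(f"halted at {name!r}: {exc}") from exc
            else:
                raise StepFailed(f"halted at {name!r}: retries exhausted")
        return state


calls = {"fetch": 0, "post": 0}


def fetch(state: dict) -> dict:
    calls["fetch"] += 1
    if calls["fetch"] == 1:
        raise TimeoutError("upstream timeout")  # transient: succeeds on retry
    return {**state, "invoice": 120.0}


def validate(state: dict) -> dict:
    if state["invoice"] > 100:
        raise ValueError("amount exceeds limit")  # not transient: halt immediately
    return state


def post_payment(state: dict) -> dict:
    calls["post"] += 1  # must never run on unvalidated data
    return {**state, "paid": True}


orchestrator = Orchestrator()
halted = None
try:
    orchestrator.run([("fetch", fetch), ("validate", validate), ("post_payment", post_payment)], {})
except StepFailed as err:
    halted = str(err)
print(halted)  # halted at 'validate': amount exceeds limit
print(orchestrator.log)
```

The same model could sit behind every step here. Whether a failure stays contained depends on the orchestration logic.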

Architectural Takeaway: AI agents should be evaluated as systems of coordination, not isolated components. Architecture, not intelligence alone, determines whether agents can operate safely, persistently, and at scale.

What AI Agents Are Not: Clearing Persistent Misconceptions

As AI agents gain attention, the term is often applied to systems that look similar but operate very differently.

Agents vs Chatbots: Chatbots are reactive and conversational; agents are proactive, goal-driven, and stateful.

Agents vs RPA: RPA systems are deterministic and rule-based; agents are adaptive and exception-aware.

Agents vs ML Pipelines: Traditional ML pipelines produce predictions; agents translate those insights into end-to-end decision execution.

Crucially, AI agents do not replace existing enterprise systems. They sit above them, orchestrating models, automation, and human judgment into cohesive workflows.

Why Enterprises are Adopting AI Agents

Enterprise interest in AI agents is not driven by novelty; it is a response to structural constraints that existing AI tools have failed to resolve.

Structural Drivers

Decision Overload: Information is growing faster than human decision capacity. Agents continuously monitor signals, synthesize context, and push decisions forward.

Fragmented Systems: Enterprises run on disconnected tools and data silos. Agents act as a coordination layer, reducing handoffs and workflow friction.

High Coordination Costs: Manual follow-ups, reconciliations, and exception handling consume time. Agents automate sequencing and escalate only when needed.

Pressure for AI ROI: As AI pilots mature, organizations need measurable impact. Agents move AI from isolated experiments to sustained operational value.

Recent years have brought better tool integration, improved controllability, and clearer governance patterns, all of which have contributed to growing adoption of AI agents. Adoption is strongest in functions characterized by continuous information flow and judgment-heavy decisions, such as:

Research and intelligence

Operations and monitoring

Finance, risk, and compliance

Customer service

Product and strategy teams

Enterprise AI Agent Patterns: Real-World Examples

The examples below illustrate common enterprise AI agent patterns observed in production deployments. These are not endorsements or recommendations.

| Product | What It Does | Agentic Logic | Enterprise Value |
|---|---|---|---|
| Microsoft Copilot Studio | Builds custom internal enterprise agents | Stateful, tool-enabled, governed agents | Speeds agent creation with security and control |
| GitHub Copilot Workspace | Plans, writes, tests, and iterates on code tasks | Breaks goals into steps, uses development tools, maintains task state | Shortens development cycles and shifts engineers from execution to review and oversight |
| Salesforce Agentforce | Executes sales and service actions inside CRM | Runs continuously, uses customer context, coordinates actions | Improves efficiency within core workflows |
| ServiceNow AI Agents | Resolves IT, HR, and service cases | Maintains long-running context under governance | Improves service efficiency through faster resolution and scales support without increasing headcount |

Across enterprise deployments, a clear pattern has emerged: successful systems consistently emphasize orchestration, context, memory, and governance over raw autonomy. Autonomy without these elements tends to amplify risk rather than reduce effort.

This has driven a shift from one-off copilots toward durable agent systems embedded in intelligence and decision workflows. These systems are judged not by individual interactions but by their reliability over months of operation.

Risks, Limitations, and Governance Constraints

As AI agents move from experimentation to production, their limitations become operational rather than theoretical. In enterprise environments, the primary risks stem not from model intelligence but from how agents interact with systems, people, and processes.

Technical Risks

Hallucination-Driven Actions: When agents are empowered to act, incorrect assumptions can translate into real-world consequences. In an advisory system, an error stays in the output. In an agent, it propagates into systems of record.

Tool Misuse and Cascading Failures: Agents operate across multiple tools and dependencies. A failure or misinterpretation in one step can cascade across workflows if it is not properly constrained and monitored.

Memory Drift: Over time, agent memory can accumulate outdated or misleading context. Without mechanisms to refresh, validate, or expire memory, long-running agents risk making decisions based on stale assumptions.
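One way to guard against memory drift is to timestamp every memory entry and expire it after a time-to-live (TTL). A second guard is to invalidate, in one step, every fact derived from a source known to have changed. The sketch below covers the expire and validate cases and assumes a simple in-process list. `ExpiringMemory`, the 30-day TTL, and the source labels are hypothetical. The usage example passes timestamps explicitly so its behavior is deterministic.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MemoryEntry:
    fact: str
    source: str          # where the fact came from, so it can be invalidated later
    recorded_at: float   # seconds since the epoch


@dataclass
class ExpiringMemory:
    """Hypothetical long-term memory whose entries expire after ttl_seconds."""
    ttl_seconds: float
    entries: list[MemoryEntry] = field(default_factory=list)

    def remember(self, fact: str, source: str, now: Optional[float] = None) -> None:
        recorded_at = time.time() if now is None else now
        self.entries.append(MemoryEntry(fact, source, recorded_at))

    def recall(self, now: Optional[float] = None) -> list[str]:
        now = time.time() if now is None else now
        # Expire: drop entries older than the TTL so stale context cannot drive decisions.
        self.entries = [e for e in self.entries if now - e.recorded_at <= self.ttl_seconds]
        return [e.fact for e in self.entries]

    def invalidate(self, source: str) -> None:
        # Validate: when a source is known to have changed, forget everything derived from it.
        self.entries = [e for e in self.entries if e.source != source]


DAY = 86_400
memory = ExpiringMemory(ttl_seconds=30 * DAY)
memory.remember("Supplier A lead time is 14 days", source="erp", now=0)
memory.remember("Customer X prefers email", source="crm", now=20 * DAY)
memory.remember("Q3 budget frozen", source="finance", now=25 * DAY)
print(memory.recall(now=40 * DAY))  # ['Customer X prefers email', 'Q3 budget frozen']
memory.invalidate("finance")
print(memory.recall(now=40 * DAY))  # ['Customer X prefers email']
```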

Organizational Risks

Over-Automation: When judgment-heavy tasks are automated without oversight, strategic and ethical nuance is lost and accountability erodes.

Trust and Adoption Gaps: If agent behavior is opaque or inconsistent, users disengage. Trust is built through predictability, explainability, and the ability to intervene.

Ownership Ambiguity: When ownership is diffused across teams, it becomes difficult to assign responsibility for cross-functional agent failures.

Governance Imperatives: The Framework for Scale

To unlock the value of AI agents while mitigating these risks, enterprises must adopt a governance-first architecture.

Bounded Autonomy: Effective agents operate within clearly defined permissions and escalation thresholds. Autonomy is scoped deliberately, not granted broadly.

Audit Trails and Traceability: Every agent action should be fully traceable: what was done, why it was done, and which data sources informed the decision.

Human-in-the-Loop (HITL) Override: High-impact workflows require explicit intervention points, including approval steps or kill switches, where humans can pause or reverse agent actions.

Evaluation Beyond Accuracy: Agents should be evaluated on reliability, impact, risk exposure, and operational cost, not just model-level accuracy.
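Bounded autonomy, audit trails, and human override can be combined into a single gate that every agent action passes through. This is a minimal sketch under stated assumptions. The `Policy` and `GovernedExecutor` names, the monetary approval threshold, and the `approve` callback are hypothetical, and the callback only stands in for a real human review step. The executor logs every attempt, including blocked and rejected ones, because those records are often the most important to trace.

```python
import json
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Policy:
    allowed_actions: set[str]      # bounded autonomy: explicit permission scope
    approval_threshold: float      # actions above this amount need human sign-off
    paused: bool = False           # kill switch: when True, nothing executes


@dataclass
class GovernedExecutor:
    policy: Policy
    approve: Callable[[dict], bool]  # hypothetical stand-in for a human review step
    audit_log: list[str] = field(default_factory=list)

    def execute(self, action: str, amount: float, reason: str, sources: list[str]) -> str:
        if self.policy.paused:
            outcome = "blocked: agent paused"
        elif action not in self.policy.allowed_actions:
            outcome = "blocked: outside permission scope"
        elif amount > self.policy.approval_threshold and not self.approve(
            {"action": action, "amount": amount, "reason": reason}
        ):
            outcome = "rejected by reviewer"
        else:
            outcome = "executed"  # a real system would call the downstream API here
        # Audit trail: what was attempted, why, which data informed it, and the result.
        self.audit_log.append(json.dumps({
            "action": action, "amount": amount, "reason": reason,
            "sources": sources, "outcome": outcome,
        }))
        return outcome


policy = Policy(allowed_actions={"issue_refund"}, approval_threshold=500.0)
executor = GovernedExecutor(policy, approve=lambda request: request["amount"] <= 1_000)
print(executor.execute("issue_refund", 120.0, "duplicate charge", ["billing:INV-1"]))  # executed
print(executor.execute("issue_refund", 2_500.0, "goodwill gesture", ["crm:ACC-7"]))   # rejected by reviewer
print(executor.execute("close_account", 0.0, "inactive", ["crm:ACC-9"]))             # blocked: outside permission scope
policy.paused = True  # a human operator pulls the kill switch
print(executor.execute("issue_refund", 50.0, "late delivery", ["orders:ORD-3"]))     # blocked: agent paused
```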

Organizations that invest in governance early unlock scale and trust; those that defer it inherit systemic risk.

How Enterprises Should Think About AI Agents Strategically

The question is no longer whether to adopt AI agents, but how to deploy them responsibly at scale. Lasting impact depends on a few critical design choices.

Guiding Principles:

Start with workflows, not agents: Redesign high-friction workflows around continuous monitoring and action, not point automation.

Design for augmentation before autonomy: Use progressive autonomy, beginning with human review and expanding permissions as reliability is proven.

Measure outcomes, not model metrics: Move beyond accuracy or token counts. Track business KPIs such as cycle time, cost per resolution, risk detection rate, and EBIT impact.

Treat agents as durable systems: Agents are not features or experiments. They are persistent systems that require ownership, maintenance, and governance. Designing for durability from the outset avoids rework and technical debt.
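Progressive autonomy can be written down as a small, explicit policy instead of an informal judgment. The sketch below assumes three hypothetical autonomy levels and a simple rule. The agent moves up one level after a streak of successful reviewed actions and drops one level on any failure. `AutonomyController` and its thresholds are illustrative. Real values would come from the risk profile of each workflow.

```python
from dataclasses import dataclass

# Hypothetical autonomy levels, from most to least supervised.
LEVELS = ["suggest_only", "act_with_approval", "act_and_report"]


@dataclass
class AutonomyController:
    """Promote after a streak of successful outcomes; demote on any failure."""
    promote_after: int = 20   # consecutive reviewed successes needed to move up one level
    level: int = 0            # index into LEVELS; start with human review of everything
    streak: int = 0

    def record(self, success: bool) -> str:
        if not success:
            # Any failure resets the streak and drops one level (never below suggest_only).
            self.level = max(self.level - 1, 0)
            self.streak = 0
        else:
            self.streak += 1
            if self.streak >= self.promote_after and self.level < len(LEVELS) - 1:
                self.level += 1
                self.streak = 0
        return LEVELS[self.level]


controller = AutonomyController(promote_after=3)
outcomes = [True, True, True, True, False, True, True, True]
levels = [controller.record(ok) for ok in outcomes]
print(levels)
```

Because promotion requires a fresh streak after every demotion, trust is rebuilt gradually rather than restored instantly.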

As adoption matures, enterprises are increasingly moving toward multi-agent orchestration: coordinated systems of specialized agents working together under shared governance. This shift will define the next phase of enterprise AI maturity.
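A multi-agent setup can be sketched as a coordinator that routes work to specialized agents. One shared governance policy applies to every hand-off. `Coordinator`, the `research` and `finance` capabilities, and the keyword-based policy are hypothetical. In practice each registered agent would be a complete agentic system rather than a one-line function.

```python
from typing import Callable


class Coordinator:
    """Hypothetical router: specialized agents register by capability, and every
    hand-off passes through one shared governance policy."""

    def __init__(self, policy: Callable[[str, str], bool]):
        self.policy = policy                                # shared rule: (capability, task) -> allowed?
        self.agents: dict[str, Callable[[str], str]] = {}
        self.trace: list[tuple[str, str]] = []              # shared record of who handled what

    def register(self, capability: str, agent: Callable[[str], str]) -> None:
        self.agents[capability] = agent

    def dispatch(self, capability: str, task: str) -> str:
        if capability not in self.agents:
            return f"no agent for {capability}; routed to human"
        if not self.policy(capability, task):
            return f"{capability} blocked by policy"
        self.trace.append((capability, task))
        return self.agents[capability](task)


coordinator = Coordinator(policy=lambda capability, task: "confidential" not in task)
coordinator.register("research", lambda task: f"summary of {task}")
coordinator.register("finance", lambda task: f"cost model for {task}")

brief = coordinator.dispatch("research", "competitor pricing")
model = coordinator.dispatch("finance", brief)  # one agent's output feeds the next
print(model)                                                      # cost model for summary of competitor pricing
print(coordinator.dispatch("legal", "contract review"))           # no agent for legal; routed to human
print(coordinator.dispatch("research", "confidential M&A memo"))  # research blocked by policy
```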

What This Means for Enterprises

AI agents represent a fundamental shift in how work is executed. Competitive advantage will come not from deploying the most advanced models, but from how effectively organizations design and govern agentic systems. In practice, three factors will continue to differentiate the leaders of 2026:

Multi-Agent Orchestration: Moving beyond “one agent for one task” models, leaders build compound systems in which specialized agents coordinate tools, data, and decision steps in concert.

Architectural Sovereignty & Governance: Ensuring control and traceability as autonomy increases. Success requires grounding agents in proprietary data while maintaining strict audit trails for every action taken.

Decision Leverage: Shifting the goal from incremental efficiency to decision intelligence. Using agents to absorb complexity and scale expertise, freeing humans for high-stakes strategic judgment.

The Path Forward

The path forward calls for clear-eyed optimism. Enterprises that succeed in 2026 will be those that prioritize execution over experimentation, and responsibility over hype, treating AI agents as persistent systems requiring thoughtful design, long-term ownership, and human-centric oversight. Those that do will be best positioned to turn AI agents into a sustained source of strategic advantage.